Right now, I'm sitting in a small cottage in the rural expanse north of Stockholm, Sweden, and I can't think of a better place to write about our massive cultural fear of technology.

Okay, so "massive cultural fear of technology" may be a slight overstatement, but only just. If our major stories are any indicator, we're terrified. In recent years, the "person vs technology" brand of dystopia has become more prominent, and the increasing success of such stories has been a consistent trend for the last century. I want to take a minute to ask why digital-era dystopia is using the human vs technology form of conflict more often, and what that says about our cultural story.

The Rise of the Technological Dystopia

Many person vs technology stories give other types of conflict prominent roles, and many stories built around other conflicts carry a secondary or embedded person vs technology thread. When we look for any story where person vs technology is a significant factor, we come to an impressive list.

Her, RoboCop, Elysium, The Island, Transcendence, Surrogates, Orphan Black, Do Androids Dream of Electric Sheep? (adapted into the film Blade Runner), Fahrenheit 451, Never Let Me Go (book and film), The Matrix, Vanilla Sky, Mass Effect, Total Recall, Minority Report, 2001: A Space Odyssey, Terminator, I Am Legend, Mindscan, Neuromancer, Brave New World, Avatar, AI, Gattaca, Twelve Monkeys, Slaughterhouse-Five, The Time Machine, Half-Life 2, The Hunger Games, A Canticle for Leibowitz, "The Machine Stops," The Road, Zen and the Art of Motorcycle Maintenance, and I, Robot. And that's just me scouring my brain for a few minutes. I'm sure you can come up with plenty of titles I missed.

My point isn't just that there are a lot of these: It's that so many of them are famous. This type of narrative has become a significant part of our cultural psyche, but when and why did that happen? The when is easy enough to address: There's a less-than-subtle connection between the industrial revolution and increasing fear of technology in literature. Since then, however, the tension has shifted. It's no longer the external conflict of resenting technologies that are part of oppressive systems. Today, it's an internal conflict: The tension between being entirely dependent on technology and fearing both that dependence and the technology that enables us to live the way we do.

Losing Our Humanity

When I talk about "human vs technology," I'm not just talking about the category of literary conflict. I'm also referring to the increased prominence of a very direct conflict between our technologies and our human-ness. This poem does an excellent job of illustrating my point:

Maybe it's an overstated version of the idea, but this isn't the only rendition we have that showcases the ways we fear—or maybe just sense—that the things that were built to connect us are leaving us disconnected.

And have you ever tried to stop responding to your smartphone so quickly, only to find that you open it up to check notifications without any conscious thought? At some point along the way, the things we programmed started programming us.

This is the cultural fear in its current sense. Digital-era dystopias use hyperbole to raise these topics for conversation, but they also do something more: They look down the path and capture a profound fear that our human-ness, already being encroached upon by our technologically saturated world, may someday vanish altogether.

The Singularity and Fear of the Dark

In 1993, Vernor Vinge wrote a famed essay about the "coming Singularity." In the long and complicated discussion that's erupted since, the term "Singularity" has been used in a great many ways, but let's return to the original. Vinge's use of the term was a reference to the science of black holes. Matter attracted to black holes is pulled slowly at first but then more and more quickly, eventually crossing an event horizon. Past that point (to use the scientific terminology) shit gets crazy. At the center of the black hole is what we call a gravitational singularity: A place where space-time curvature becomes infinite and all our normal rules of physics break down.

Vinge was referring to a similar breakdown, but looking toward a point of infinite technological change rather than infinite gravity.

The notion is that each technological advancement increases the speed at which the next technological advancement occurs. Large-scale, broad-scope advances that previously took decades or even centuries can now happen in a matter of months or even weeks. Vinge theorizes about a point in time where the rate of discovery and implementation becomes so fast that all of our normal rules break down. He predicted that this exponential growth would curve up sharply in the early decades of the 2000s, and that once this happened, we would be in a post-human era.
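For the numerically inclined, here's a toy sketch of why shrinking gaps between advances point to a specific moment rather than a gentle slope. It assumes, purely for illustration, that each advance arrives a fixed factor sooner than the one before it. The function names, the 10-year starting gap, and the speedup factor of 2 are made-up assumptions for this sketch, not anything taken from Vinge's essay.

```python
def years_until_nth_advance(first_gap_years, speedup, n):
    """Total years elapsed after n advances, when each gap between
    advances is the previous gap divided by `speedup` (toy assumption)."""
    total = 0.0
    gap = first_gap_years
    for _ in range(n):
        total += gap
        gap /= speedup  # the next advance arrives `speedup` times sooner
    return total


def horizon_years(first_gap_years, speedup):
    """Limit of the geometric series above: the date that no number of
    advances ever passes. Only finite when speedup > 1."""
    if speedup <= 1:
        raise ValueError("gaps must shrink (speedup > 1) for a finite horizon")
    return first_gap_years / (1 - 1 / speedup)


if __name__ == "__main__":
    for n in (1, 3, 10, 50):
        print(n, years_until_nth_advance(10, 2, n))
    print("horizon:", horizon_years(10, 2))
```

With a 10-year first gap and a doubling of pace, the first three advances take 17.5 years, and no number of advances ever takes more than 20. In this toy model, that 20-year mark plays the role of the Singularity: infinitely many advances pile up just before it.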

Two things stand out here. First, whether or not Vinge's idea of a point of infinite technological advancement is at all feasible, it's true that technological growth has been accelerating, and continues to accelerate, at an unprecedented pace that makes the future harder and harder to predict.

Second, Vinge's notion of a post-human era (regardless of its factual validity) points toward the strong sense that technology is quickly becoming so complex, far-reaching, and omnipresent that individual humans no longer have a sense of understanding or controlling the technology they use. We feel progressively more disconnected from technologies that are simultaneously becoming more and more integral to our daily lives.

Will We Truly Be Post-Human?

The technological Singularity could happen in a number of ways, according to the theory's proponents. The first is a super-intelligent AI, which could then quickly develop a super-super-intelligent AI, and so forth ad infinitum, until humans are functionally obsolete. This is the AI (artificial intelligence) theory. The second is that humans could become so integrated into the technology that the very definition of human would change. This is the IA (intelligence amplification) theory.

These ideas rely, more often than not, on taking the computational theory of mind for granted. Basically, this concept says that the brain is an amazingly powerful and complex computer, but that its basic functions are categorically similar to those completed by a computer processor. It's just that there are a lot of these functions (approximately 10^16 per second, according to some estimates). If this is true, the possibility of making a computer capable of human or super-human thought is far from absurd.

It's tempting for me to dive deeper into this topic, because I think it's a terrible assumption to take for granted. Simultaneously, I have to admit that the brain, on the whole, isn't understood particularly well. Any theory of mind or theory of consciousness has to rely on a metaphysical assumption at this stage of the game. Whether you believe in the computational theory of mind or believe that theory is bunk, you're acting on faith rather than facts.

With all of these unknowns and all of the complexities of the next tier of technology, we've reached a point where the mathematical has become mystical. For, as Arthur C. Clarke said, "Any sufficiently advanced technology is indistinguishable from magic."

To me, the rise of digital-era dystopia is defined by those fears: of losing control of our society, of losing connection, and of gradually losing our humanity. But what do you think? When you start tugging at the strings, you realize how big a topic this really is, and I'd love to hear your take on it.

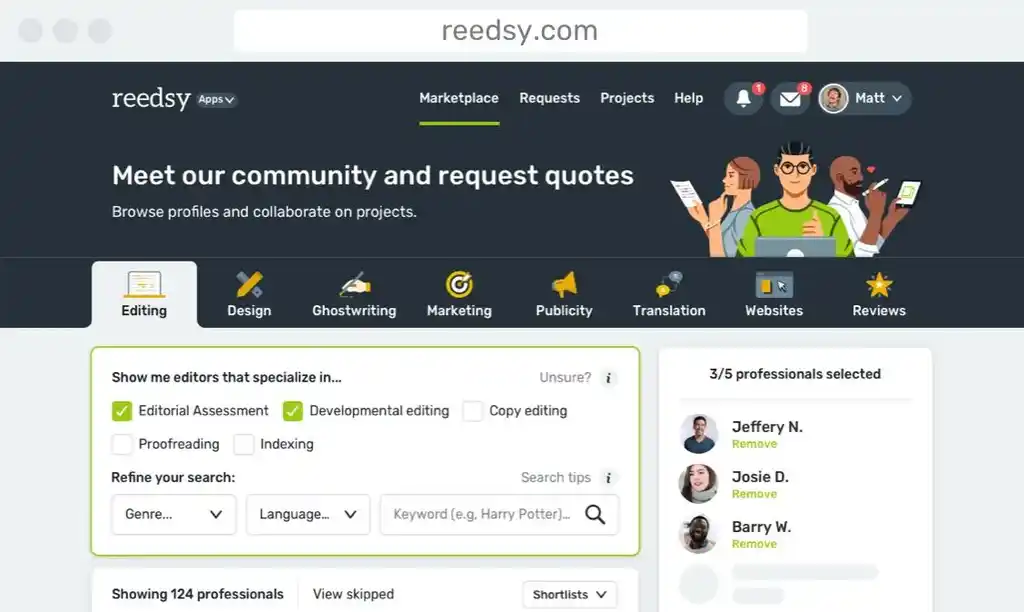

About the author

Rob is a writer and educator. He is intensely ADD, obsessive about his passions, and enjoys a good gin and tonic. Check out his website for multiple web fiction projects, author interviews, and various resources for writers.